The 45-Minute User Session

One morning, I reviewed a Hotjar report and discovered a user session lasting 45 minutes, far longer than the typical 3-5 minute sessions in which users seemed to be testing the waters of our newly launched product. Curious and excited to see how someone might be using our tool for real work, I watched the session playback. Initially, I saw progress, but as the session continued, the user’s actions became erratic: searching, hesitating, backtracking. Despite having modeled something, they never clicked the "run simulation" button. Why not? And how many others were struggling with the same issue?

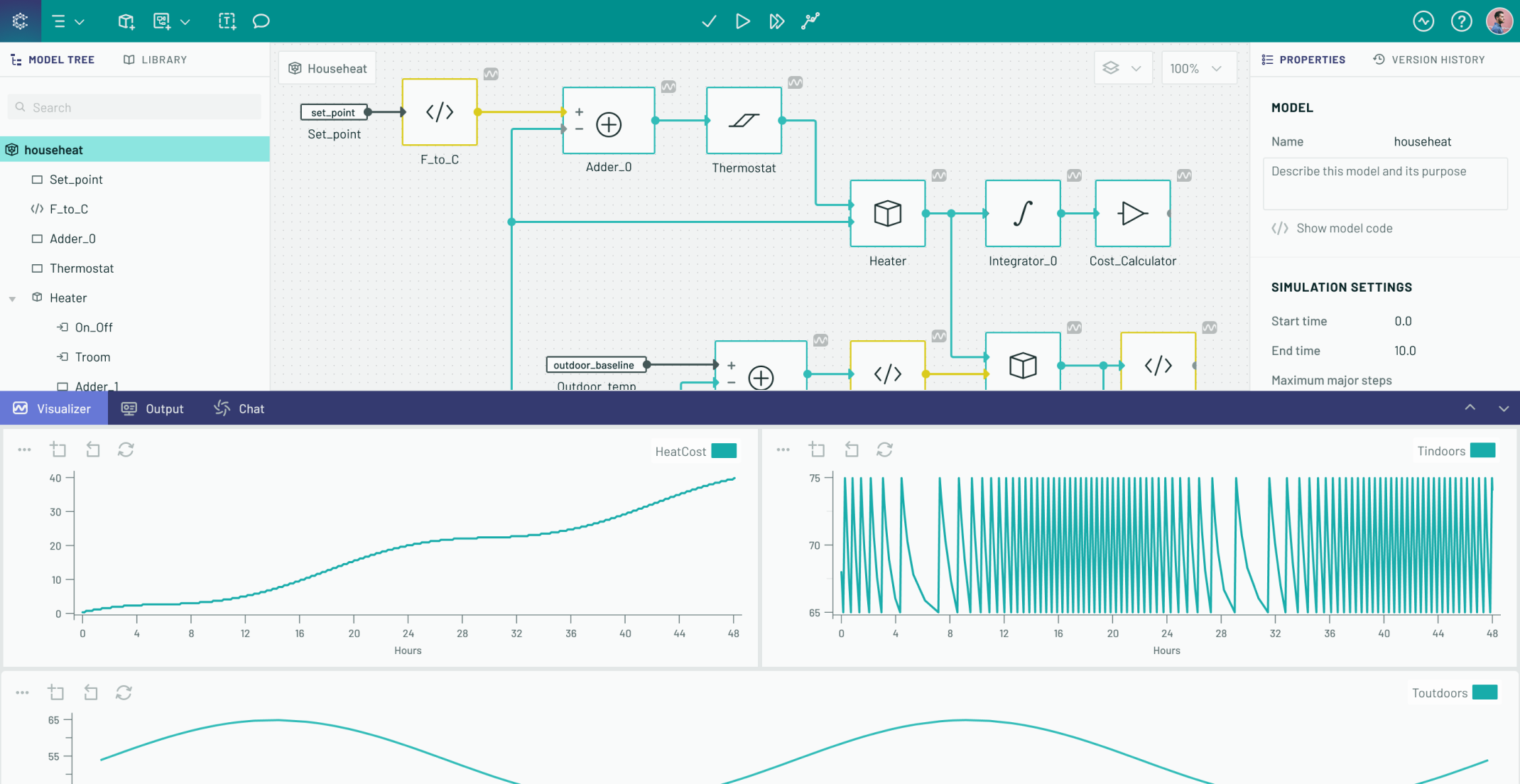

Background: Understanding Collimator’s Product

Our product at Collimator is a modeling and simulation tool that allows engineers to translate equations into visual block diagrams. These diagrams become functional models of the system to be built. To validate their models, engineers run simulations and analyze system outputs. Running a simulation is crucial: if users aren’t running simulations, they’re either distracted, stuck on modeling, or unable to figure out how to initiate a simulation. This session suggested a deeper problem: users could build models but weren’t taking the next step.

Analyzing User Behavior

I reviewed additional user sessions and noticed a pattern: many users didn’t run simulations. I worked with the engineering team to examine our metrics, which revealed that most sessions included no simulations. We decided to track this more closely, but our existing analytics fell short, so I turned to hypothesis testing.
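As a rough illustration of the kind of metric involved, the share of sessions with no simulation run can be computed from a flat event log. This is a minimal sketch, not our actual analytics pipeline: the `(session_id, event_name)` tuple format, the `simulation_run` event name, and the function name are all assumptions made for the example.

```python
from collections import defaultdict


def share_without_simulation(events, run_event="simulation_run"):
    """Return the fraction of sessions that never fired `run_event`.

    `events` is an iterable of (session_id, event_name) pairs.
    Both the pair format and the event name are hypothetical.
    """
    # Collect the distinct event names seen in each session.
    sessions = defaultdict(set)
    for session_id, event_name in events:
        sessions[session_id].add(event_name)

    if not sessions:
        return 0.0

    # Count sessions in which the run event never appears.
    missing = sum(1 for names in sessions.values() if run_event not in names)
    return missing / len(sessions)


# Hypothetical sample log: only session "s1" runs a simulation.
sample_events = [
    ("s1", "block_added"),
    ("s1", "simulation_run"),
    ("s2", "block_added"),
    ("s3", "library_opened"),
    ("s3", "block_added"),
]
print(share_without_simulation(sample_events))
```

A number like this only says how many sessions lack a run, not why, which is where the hypotheses come in.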

Hypotheses and Exploration

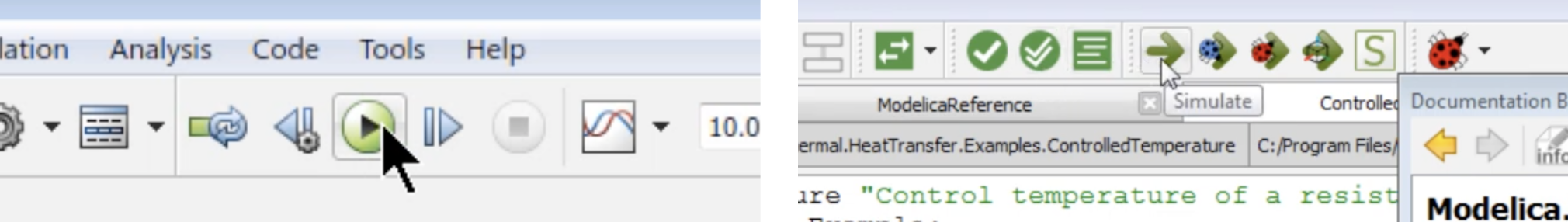

Hypothesis 1: The Design Wasn’t Intuitive

I had designed the "Play" button based on the concept of scrubbing through simulation time, akin to a video player. Early tests with experienced engineers had shown the button icon to be intuitive and in line with competitors’ approaches, but maybe the metaphor wasn’t clear enough for all users.

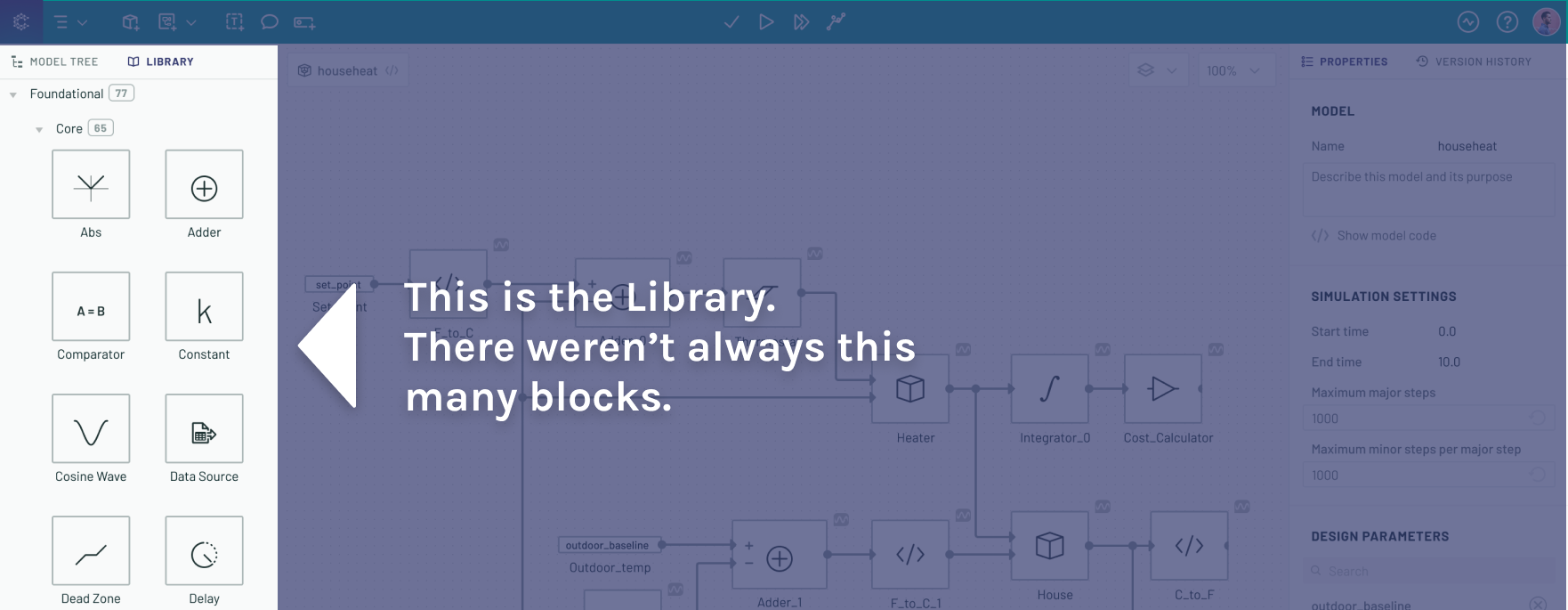

Hypothesis 2: Missing Features

Reviewing sessions, I saw users who logged in, explored our block library, then quickly left—likely because the specific blocks they needed weren’t available. This suggested that some sessions weren’t relevant to the simulation issue but pointed to other needs.

Hypothesis 3: Poor Documentation

I considered that users might be struggling with running simulations because they couldn’t find clear instructions. A quick review showed that the documentation was comprehensive and accessible through multiple pathways, but it still might not have been meeting user needs. Unfortunately, our analytics were inconclusive about how effectively users were finding and using these resources.

Hypothesis 4: Misaligned User Expectations

Reflecting on user behavior, I realized that our decision to let users run simulations without selecting signals for plotting might have been counterproductive. In other tools, signal selection was required before running a simulation, and users might have expected this step.

Iterating Toward a Solution

Introducing a Prompt for Signal Selection

The first solution I proposed was to prompt users to select signals before running a simulation, guiding them through a step that might have been missed. However, after discussion with the team, I decided that adding extra clicks could compromise a seamless user experience. I continued to explore more intuitive solutions before increasing user effort.

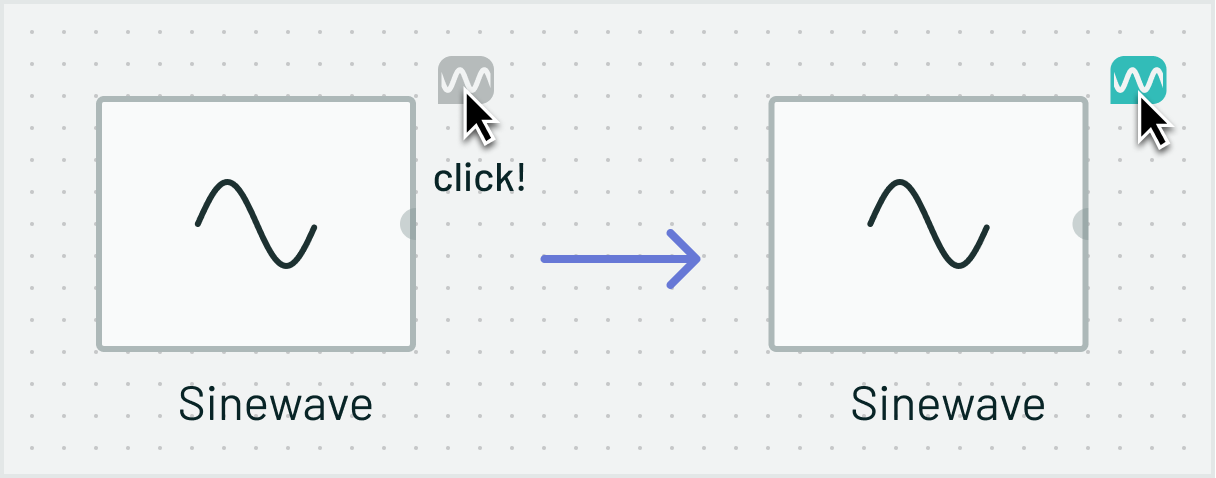

Testing Alternative Visual Metaphors

The existing toggle button for marking outputs had an icon intended to resemble both an oscilloscope screen and a speech bubble. In the rush to launch, I hadn’t fully validated its effectiveness. To address this, I quickly sketched out alternative icon concepts and tested them with our engineers, asking questions like, “What does this icon look like to you? If you saw it on a button, what action would it suggest?” Despite exploring various options, none of the alternatives proved more intuitive than my original design.

Enhancing Onboarding with Contextual Prompts

If the icon wasn't the issue, could better guidance help? I implemented context-sensitive prompts that would guide users through the simulation process exactly when they needed it. Using subtle tooltips and brief video explanations, these prompts appeared after a brief delay if users seemed stuck, nudging them towards actions like running simulations. This adjustment led to a 200% increase in simulation runs. It wasn’t a complete solution, but it was enough to deprioritize the issue for the time being.
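The "seemed stuck" check can be expressed as a small decision rule. The sketch below is an assumption about how such a rule might look, not the logic we shipped: the `SessionState` fields, the 20-second threshold, and the function name are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SessionState:
    """Hypothetical snapshot of what the editor knows about a session."""
    blocks_in_model: int
    has_run_simulation: bool
    seconds_since_last_action: float
    prompt_already_shown: bool = False


def should_show_run_prompt(state, idle_threshold_s=20.0):
    """Decide whether to nudge the user toward running a simulation.

    The threshold value is an assumed placeholder, not a tested number.
    """
    # Never nag: show the prompt once, and not to users who already ran.
    if state.prompt_already_shown or state.has_run_simulation:
        return False
    # An empty canvas has nothing to simulate yet.
    if state.blocks_in_model == 0:
        return False
    # Treat a pause longer than the threshold as a sign of being stuck.
    return state.seconds_since_last_action >= idle_threshold_s


stuck = SessionState(blocks_in_model=4, has_run_simulation=False,
                     seconds_since_last_action=35.0)
print(should_show_run_prompt(stuck))
```

Keeping the rule this small makes the delay easy to tune: a shorter threshold risks interrupting users who are thinking, while a longer one risks losing users who have already given up.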

A Surprising Resolution

A few weeks later, I revisited the metrics and found that the issue of users struggling to run simulations had largely disappeared, even though our onboarding prompts had broken in the meantime (which I promptly reported as a bug). It turned out that user-generated tutorials on YouTube had filled the gap, becoming the go-to resource for new users. We reached out to several of these creators to form ongoing partnerships. Not only did we help improve the quality of the content, but we also received valuable feedback on our product and reports of unmet needs in the community. It also signaled to us that even though our platform was young, people were already talking about it, and so should we.

The Impact

The two-pronged approach was working. The improved onboarding flow had increased simulation runs, and as our social media presence grew, new user sign-ups skyrocketed. We continued to bolster our growth with product demos, new feature announcements, and community partnerships, leading us to double our user base in a month.

Key Learnings

- You Don’t Have to Test Every Hypothesis: It’s a good idea to generate a lot of ideas for exploration, but you can deprioritize or discard the unlikely ones.

- The Perfect Metaphor Isn’t Always Necessary: Sometimes, it’s better to focus on creating a clear, teachable interaction than to obsess over finding the perfect design metaphor.

- Embrace Community-Driven Solutions: The rise of user-generated tutorials reminded us that a strong user community can be as effective as in-product guidance in driving product adoption.